TC Meeting Minutes 2020-2021

Wendy Thomas

Jon Johnson

Darren Bell

Mary Vardigan

ATTENDEES: Wendy, Jon, Jeremy, Dan S., Flavio, Larry, Ingo

Regrets: Darren, Oliver, Barry

Ingo contact from Scientific Board

- Improve contacts with working groups so that they have a better sense of the ongoing activities of the working group

- Help report and facilitate work of the group, seek help where needed (resources etc.)

- TC felt that it would be helpful to have that outside/neutral reporter

Technical/Implementer focused documentation

- Possible working group

- Set up a couple of implementer meetings for brainstorming

- Prepare materials - what kinds of things people are finding hardest to understand

- Useful, easy-to-generate information

- ACTION: Prepare something for early January

In-person meeting update

- Jared stated that we will need to reapply for money in new year

- Space OK

- Make clear how a virtual work event will affect the agenda of in-person meeting

Virtual Work Event on COGS in spring

- Half days might work better

- April would not be bad - follow up at the face-to-face meeting

- COGS:

- Validation input to CSV and then CSV to output implementations

- Decisions regarding changes to input (choice etc.)

- Serializations and implementation languages JSON, OWL, XMI, XML

ACTION: Clear statement of what needs to be done and when

DOI follow-up

ACTION:

- Send out the note to all users with link to JIRA issue tracker

- What is on their wish list - what and how do they need to cite

- DOI for top level DDI Alliance site as a project

Mailman follow-up

ACTION:

- Write up a piece and then lateral to Marketing

- If we go to Google Groups (MGroups format)

- Marketing team if they want to have community discussion web site

- Or do we just want email? Make a decision before moving platform

Quick discussion of upcoming Scientific Community meeting

- Not the annual meeting (bylaw triggered meeting of reporting and review of scientific plan)

- 2 Scientific Community meetings for community motivation

- Some topics that are being addressed in groups but need broader expansion

- URN resolution, mapping within suite, mapping with outside standards

- Generate interest and targeted activity and participation

- There are plenty of proposals in the scientific plan that could be chewed on

- Doing advertisement from TC to things we want to create or promote

Extra Action Item:

- EDDI videos will be turned around quickly as received.

- Jon will follow up and notify us when the update on the Agency Registry is ready for reference so we can make announcements

ATTENDEES: Wendy, Jon, Jeremy, Dan S., Flavio, Darren, Oliver, Larry, Johan

AGENDA:

CV update from CESSDA

- Update on export of data from the CESSDA system so that we can work on import process

- Oliver has had conflicts the past month; he hopes to have something by the end of next week

- Carsten is signing on with Slovakian group to fix issues

- Darren has meeting with Carsten and group to focus on design

- Will need to review what the versioning model looks like

- Manage in CESSDA tool and export for the purpose of publishing - it's a one-way path

Colectica registry enhancement

- Write documentation that can be put on the site

- When video from EDDI is available we can provide link as an introduction

DOIs for high-level documentation

- How do we organize the document (DOI for each full document or parts)

- How do we handle versioning

- Using the established DDI use of

- Purpose of the DOI is to use in citing documentation and should be to the published PDF; the Sphinx online version is the development electronic document

- Main point of DOI is that it is immutable so DOI could be a root document that may include a change log

- Difference between a journal article and our documentation is enforcing what happens inside the document

- The purpose is to provide a pointer to the document

- Need to clarify a versioning policy - we have to be careful that we are clear about what constitutes a new DOI and how content is packaged

- What do we want to put a DOI on? the thing we publish is the standard is the schema (including field level)

- The documentation needs some connection to the schema as it is separate

- What is the purpose of a DOI and is it the most appropriate? What needs to be covered? What's the best way to do this?

- Link is a citation

- API referencing guide for standards is the URL of the agency in this case ddialliance.org....so does that need a DOI

ACTION:

- We've had a number of requests so let's draft something up internally

- Start a JIRA ticket in TC and put it out to the user group: send a note to the DDI Users group asking them to add to the JIRA ticket or send an email

Repository organization for standards and product generation tooling

- As the CDI document gets more finalized keep group informed

- Discuss intent of document with Achim

Timeline for DDI-CDI - estimated to be provided to TC Jan 19

Face-to-Face meeting planning

- Contingent upon travel restriction and approval processing

- Check on contingency of moving money to next year, tentatively July

- Check on space availability

- Do a virtual thing to put some time aside to work on COGS and get that done

- Transition planning - brainstorming session with moderator; leadership, organization, future-proofing technical and processing aspects, shifting to management of a suite of products as opposed to a single standard

ATTENDEES: Wendy, Jon, Darren, Oliver, Dan S., Larry, Flavio, Johan, Jeremy

Mappings:

- division of work

- TC should drive product mapping

- Who should be driving mapping of different types

- How to coordinate with work taking place with other standards such as GSBPM, CoData, SDMX etc.

TASK:

- What needs to be finalized on the high level model?

- Expand on what is on the page - how would this work?

- How do we describe relationships at different levels (conceptual to automated transformations)

- Bring a proposed framework to the SB

- Wendy, Flavio, and Jon - pull our stuff together and discuss with Ingo in liaison role in terms of meeting our goals

CV versioning of URI in CV manager

- Oliver and Darren have talked to Taina - there are discrete version numbers in CV manager

- Notification mechanism to follow our versioning processing

Clarifying points:

- Use of names in the URI for concept ID

- Break between what has been done and what is convenient

- Why is the version part of the short name rather than a separate section

- Flexibility to do what we need to do - URIs being generated for the CESSDA specific CVs versions are brought out in an entirely different way

- If we can't get it as part of the output parameter we can (worst case) adjust this using regEx magic in transformation

- Prepare a document containing final proposal on what the URIs should look like in the end. This includes the base content, wild card mechanism for version or vocabulary trim name

- ICPSR is currently running the web site - DNS a sub-domain would be desirable

- Is there a requirement to support SPARQL endpoint queries? This would support distributed queries and could easily be added in the future if requested

- Is there a use case for a REST API? Not currently; an even weaker argument than for SPARQL

Would there be future support for Statistical Classifications? - Do we have a flat access to codes or hierarchical?

- Darren and Oliver are talking with Taina, John Shepardson and others working on the CV Manager
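
The worst-case regex adjustment mentioned in the clarifying points could look roughly like the sketch below. It assumes, purely for illustration, that the exported URIs carry the version fused to the short name with an underscore and that the target form puts the version in its own path segment. The base URL, the pattern, and the function name split_version are all hypothetical; the final URI shape is still to be set out in the proposal document.

```python
import re

# Hypothetical input form: ".../<ShortName>_<version>" (version fused to the name).
# Hypothetical output form: ".../<ShortName>/<version>" (version as its own segment).
FUSED = re.compile(
    r"^(?P<base>.+/)(?P<name>[A-Za-z][A-Za-z0-9]*)_(?P<version>\d+(?:\.\d+)*)$"
)


def split_version(uri: str) -> str:
    """Move a fused version suffix into a separate path segment.

    URIs that do not match the assumed input form are returned unchanged.
    """
    match = FUSED.match(uri)
    if match is None:
        return uri
    return f"{match['base']}{match['name']}/{match['version']}"


if __name__ == "__main__":
    print(split_version("http://example.org/cv/AggregationMethod_1.0"))
```

Leaving non-matching URIs untouched means the step can be run over a whole export without first filtering out URIs that are already in the desired form.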

ADMINISTRATIVE:

No meeting 25 Nov, and 2 Dec

Next week Codebook

ATTENDEES: Wendy, Johan, Larry, Oliver, Dan S., Darren, Jon, Barry, Flavio

Resolution work update:

- In touch and prepared schedule for specific work

- Transformations up and running mid-November

- Loss of Bitbucket Services feature should be no problem - webhooks, services may have been used with Lion

HTTP based work is planned to be completed by EDDI

Mailman lists

- Mailman history is being used to search for stuff

- Is there a way to preserve the history in Google Groups through a transfer - ACTION: Wendy check on this

- Discourse is a bulletin board and email list combined https://www.discourse.org/

- Who would set Discourse up - there would be a cost for this

- https://discourse.mozilla.org/ example

- open source but cost to hosting or paying someone to host - 85% discount for educational

- Google groups is used by Developers Group with no problems

- The broader question is whether a browser-based board makes it easier to see the conversations without needing to join the group

- $15 a month isn't really an issue

- ACTION: Summarize this discussion and contact Jared (loop Dan in this discussion)

CODEBOOK work

- Simple to Conceptual

- Highlight in the public review

- Document explicitly in inline documentation

- NCube alignment - addition of attribute and cohort region

NO Meeting on 25th November

Topic for next week:

- Mappings across standards - structure the work on this, type of mappings, etc.

- Oliver and Darren are having a meeting on versioning URI in CV manager

ATTENDEES: Wendy, Jon, Dan S., Oliver, Darren, Flavio, Johan, George (first topic only), Barry

AGENDA:

- Codebook issue resolutions

- CDI update

- RDF Resolution update

Codebook-

- The SDTL set of terms and properties for recording complex survey weights that elaborate on Stas' issue

- Stas has been making comments but in general seems to agree with the content

- It's a complex framework and it would be helpful to have insight from those involved in complex survey weights from various locations

- It's the complexity of properties that needs to be considered and it would be helpful to have more comments

- It would be helpful to contact people first

DECISION:

Review of content that is product independent - do this first and then put in a future version of Codebook; it would be good to look at this from the perspective of Lifecycle which is not just descriptive of past actions

https://docs.google.com/document/d/1wIBolHEKTi5_JujKBkdr2s92PVpQKp_z8tyPbTqQV1E/edit?usp=sharing

Review of use in Codebook as a separate process - beta version 2.6 and then official release

ACTION: create JIRA issue for separate review of weighting content to track who is being asked to look at this (TC-225)

CDI Update:

- Liaison with W3C (will be on next SB agenda) - One of the things at Dagstuhl: Pierre Antoine was starting work on a CDI RDF representation but there were some issues with the model

https://www.w3.org/2001/11/StdLiaison

Approaching a form of liaison with DDI through the Scientific Board - RDF styles across the suite - how much uniformity and how much individual flexibility should be supported

- Internal DDI vocabulary - with extension structures (this appears to be the case)

Keep track of what is going on here

RDF Resolution:

- HTML output - work with Sanda and Michael

- Current content is very out of date - recommendation is to hold until resolution is complete

- Is there an option for producing static content (SKOS file, HTML, CodeList-XML) and then loading into triple store when that is ready

- We need to nail the path down...currently http://rdf-vocabulary.ddialliance.org/[product name] also https://...

- HTML page needs to be a joint process with Michael

- It would be one of the products in the bitbucket repository where Michael could retrieve it to update

- That would be ready in a few weeks

- Michael seemed fine with working with a git repository

Added content areas from MFP work:

- Content would come after upgrade - Set up JIRA issues regarding the broad areas we want to look at and identify location of input materials - get wish list together and review wish list (filed TC-223)

- Follow-up from last week:

Filed TC-224 Documenting procedures governing management of requirements, development work, feature roadmaps, etc. to capture review of existing documents

Sent to Oliver and Darren: (questions or possible issues in the RDF resolution work from Wendy)

Right now this domain is managed by ICPSR(Michael) rdf-vocabulary.ddialliance.org

Should this be the URL associated with int.ddi? or should each product have its own subagency (ddi.lifecycle, ddi.codebook, ddi.xkos, ddi.cdi, ddi.sdtl)

Does SDTL currently use a URN or just the rdf-vocabulary.ddialliance.org?

XKOS

RDF namespace: http://rdf-vocabulary.ddialliance.org/xkos#

XKOS documentation: http://rdf-vocabulary.ddialliance.org/xkos.html

RDF Turtle file: https://rdf-vocabulary.ddialliance.org/xkos.ttl

Controlled Vocabularies (note that Controlled Vocabularies has its own subagency, which is actually "int.ddi.cv")

Short Name: AggregationMethod

Long Name: Aggregation Method

Version: 1.0

Version Notes:

Canonical URI: urn:ddi-cv:AggregationMethod

Canonical URI of this version: urn:ddi-cv:AggregationMethod:1.0

Location URI: http://www.ddialliance.org/Specification/DDI-CV/AggreagationMethod_1.0_Genericode1.0_DDI-CVProfile1.0.xml

Alternate format location URI: http://www.ddialliance.org/Specification/DDI-CV/AggregationMethod_1.0.html

Alternate format location URI: http://www.ddialliance.org/Specification/DDI-CV/AggregationMethod_1.0_InputSheet_Excel2003.xls

Note that the current URIs are in error and do not/will not resolve

What needs to be done about this? Short term, long term
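
As a small illustration of the canonical URI pattern listed above (urn:ddi-cv:ShortName, with an optional :Version), the sketch below splits such a URN into its parts. It is based only on the two example URNs in these notes, so the exact grammar is an assumption, and the names CvUrn and parse_cv_urn are hypothetical.

```python
from typing import NamedTuple, Optional


class CvUrn(NamedTuple):
    """Parts of a controlled vocabulary URN (hypothetical helper type)."""
    short_name: str
    version: Optional[str]  # None for the unversioned canonical URI


def parse_cv_urn(urn: str) -> CvUrn:
    """Split 'urn:ddi-cv:<ShortName>[:<Version>]' into its parts.

    Assumption: neither the short name nor the version contains a colon,
    as in the AggregationMethod example.
    """
    parts = urn.split(":")
    well_formed = (
        len(parts) in (3, 4)
        and parts[0] == "urn"
        and parts[1] == "ddi-cv"
        and all(parts[2:])
    )
    if not well_formed:
        raise ValueError(f"not a urn:ddi-cv URN: {urn!r}")
    version = parts[3] if len(parts) == 4 else None
    return CvUrn(parts[2], version)


if __name__ == "__main__":
    print(parse_cv_urn("urn:ddi-cv:AggregationMethod:1.0"))
```

A resolver could use the short name and version obtained this way to look up whichever location URI is eventually agreed on.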

ATTENDEES: Wendy, Flavio, Jon, Dan S., Jeremy, Johan, Larry

CDI update:

- CDI will not be delivered until end of December

- Achim needs to work on the production process and making it transferable post-retirement

- Only XML representation in initial package - RDF and JSON need to follow quickly, need to make sure it is in line with the resolution system

Broader coordination of implementation formats across the DDI suite:

RDF and JSON representations:

- How coordinated do we need to be across the board in DDI products?

- We have XKOS already and we have the style of output from COGS for RDF and JSON, CDI is also planning this as a quick follow up to initial XML release

UML modeling:

- Role in DDI suite

- Output from COGS - goal is canonical XMI

Discussion:

- It would be worthwhile to discuss sustainability of CDI post initial release with CDI - both the XMI content and also the production stream

- Relook at the platform independent and platform dependent approach - The current structure has been collapsed into a single model approach for expediency.

- RDF is getting pushed into the model similarly to how XML was done in the past again partly due to the collapse into a single model

- This differentiation doesn't have to happen before publication but there are RDF issues that need to be addressed - how modeling supports the RDF

- There may be separate models to support the RDF

- CDI vocabulary - not looked at mapping and since you can mix and match in RDF mapped content could then be used - RDF is being generated from the model in a prescriptive way. It's not clear you'd like to be chasing a changing external world as part of the specification. There is a referencing mechanism that helps support flexible use of or relationship to an external vocabulary

- Requirements are similar across the suite of products but we need to look at them as a suite in terms of approach

- What is the work product and how does that relate to being a standard?

- Lifecycle where the canonical product is currently XML and are moving towards a model with multiple implementations. So what is the standard? The model? the implementations?

- Use specific implementations of CDI and the XMI is the standard and the implementations are the recommendation of XML, RDF, JSON...

- Lifecycle is moving toward where COGS csv files expression of the model with XMI, XML, RDF, JSON, etc. expressions (serialization agnostic model)

- XMI is in the same position as the XML as there are things that are done because it's UML. XMI is a UML representation that is intended to be portable (same conceptual scheme so you can return to the model losslessly)

- There could be an exact mapping between CSV and XMI to capture the needed XMI content that is not currently in COGS.

- There is a document on the use of UML and the XMI output in CDI that is close to finalization

Management of requirements and integrated framework:

https://docs.google.com/document/d/1TEy2zdxfARDgkOABb6kzuAmHK2oBJ0f5CmxL9F9pEbQ/edit?usp=sharing

Issue presented:

- Better management of requirements and integrated framework

- How to bridge the divide between the products and make decisions about them

- How do we look at the requirements and make roadmaps

- This needs to be formalized as the complexity of what we are trying to manage increases

- There is change management required all over the place

- How we captured requirements in the past has varied, and they can get lost over time. There is no good systematic way of recording and maintaining what was done and why.

Discussion:

- We have a process for lifecycle and codebook of what changed and why and we need that across the board

- For example this got captured in Codebook 2.5 to 2.6

- Similar in Lifecycle

- Working groups are a functional aspect of that in terms of implementation

- The concern with XMI is that it's not very straightforward to do that - tracking changes

Regarding versioning of UML: https://sparxsystems.com/enterprise_architect_user_guide/14.0/model_repository/versioncontrol.html

- For XKOS there is tracking with GitHub

- For SDTL there is tracking with GitHub

- Integrated approach - a bit more than product specific approach

- The shift of CDI from development mode to production mode may be more useful

- Some of the discussions about Lifecycle have been when do we bring in development from work done in the Moving Forward Project. We have this on our agenda and need to get this moving.

- There is a need to address a strategy in terms of requirements in terms of product creep

- Entities that look too much inward end up disappearing - a need to look both at the current users and the growing environment and what needs may arise from that external group

- Standards meeting the needs of a particular domain and others that support work across different standards, as opposed to the One Ring to Rule them All

- We need to capture and manage change information between versions of XMI, particularly if this is where development is taking place

ACTION:

- There is an existing process document for the TC; update it and pull this into that document

- Terms of reference with the Scientific Board

- Look at how that document can be updated to reflect these issues and update processes

- Policy and decisions are made during recorded chat etc. Look at tools that can help coordinate this material.

- There has actually been a lot of work done on this over the years and it needs to be organized so it is clearly available, updated, and reviewed for gaps as well as new needs given change to a suite of products and changes in the environment and user groups.

ATTENDEES: Wendy, Dan S., Flavio, Johan, George, Jon, Darren

Question regarding handling default values by validators

- Should be done in the tooling

- the tool (editor/validator) should simply provide the default value
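
A minimal sketch of the approach noted above, where the tool itself supplies default values instead of relying on the validator. The attribute names and default values are hypothetical and are not taken from any DDI schema.

```python
from typing import Dict, Mapping

# Hypothetical defaults table; a real tool would derive this from the schema.
EXAMPLE_DEFAULTS: Dict[str, str] = {
    "exampleFlag": "false",
    "exampleLanguage": "en",
}


def with_defaults(
    attributes: Mapping[str, str],
    defaults: Mapping[str, str] = EXAMPLE_DEFAULTS,
) -> Dict[str, str]:
    """Return a copy of the attributes with any missing defaults filled in.

    Values given explicitly always win over the defaults.
    """
    merged = dict(defaults)
    merged.update(attributes)
    return merged


if __name__ == "__main__":
    print(with_defaults({"exampleFlag": "true"}))
```

Because the function returns a new dictionary, the document as entered stays untouched and the defaults are applied only where the tool needs the effective values.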

DDICODE-52 and related weighting and sampling issues

- parameters that are used with weighting when you reuse the data

- written from the point of view of the user - detailed guidance

- Stas idea of DDI providing guidance

- Creation of data set - expectations

- This would be inside a DDI Codebook or Lifecycle and plan is already there

- SDTL would provide a way to capture all of these elements and pass them into

- In Lifecycle perspective in principle if you are doing the strata you'd already have it in Lifecycle and can use it to populate this information

- there is not yet a smooth workflow where you specify --> implementation --> alterations --> result

- SDTL should be able to capture the analysis

- Language is the same for "how did I do it" and "how should I do it"

- Should this info on samplingDesign belong in both places

- Different shots of views from planning, implementation, effects

- Long term covering original plan, what it turned out to be.

- Short term for codebook -

- need a good descriptive of the sampling process

- guidance on how to analyze the data in that data set -

- Stas' original point is important - make it easier to use complex weights (cluster, replicate, etc.)

with examples- provide sufficient content and providing examples

- Documentation is critical - on the side of producers they all know this stuff - the statistics is well understood

- Boils down to equations and math

ACTION:

Jon - will find people familiar with panel studies, clustered stratified to review this content

ATTENDEES: Wendy, Jeremy, Dan S., Barry, Jon

Technical Work Updates:

- LOD setup update

- Agency Registry work before EDDI

Codebook:

- Stas's example reflects how he was working and can't be descriptive of the standard

- George's is much more detailed

- Find people who have worked with weighting and run it by some other people and see if it works

- Write up and find specific people to review

Resolution Document

- Streamline, limit to what the DDI Agency Registry does and coming updates

- Limit agency option to a statement that they can provide a range of services from a static page to a fully negotiated resolution system

- As agencies begin providing access at varying levels provide links to the options (similar to xml examples, tools, implementers, etc.)

ATTENDEES: Wendy, Jon, Dan S., Oliver, Darren, Larry, Flavio

LOD document:

- Clarify URL for CV and vocabularies

- Michael could set up any redirects required

- int.ddi.cv agency name

ACTIONS:

- write up note on costs based on comments

- Offer Darren and Oliver/GESIS service for set up and use next year

- Send to Jared - PDF of above document sent 2021-09-17

- update TC Statement on Resolution for review by TC members

Agency Registry Review Criteria:

- Reviewed document and discussed the intent of DDI in terms of registration - Broadly accepting

- Current system for addressing Robot filing

- Approval process basics

- Terms of use option

ACTIONS:

- Write up current basic verification and approval process

- Summarize Terms of Use issues and forward to Scientific Board for clarification and encoding of Terms of Use for Agency Registry

ATTENDEES: Wendy, Jon, Darren, Oliver, Dan S., Larry, George

- The CESSDA CV manager is being sub-contracted out and so change in their system is going to be slower for the near future

- The output from the CV manager can be put into a richer environment

- Bring XKOS into LOD environment

- The path described will get us there

- We need to not focus on what CESSDA will do, depend on it as a management platform not a dissemination platform

- We can massage the output from the CESSDA tool to correct URIs and create the CodeList and HTML page for DDI

- Add some specific LOD reference examples to the resolution document

- Additional specific comments were made on the documents

ACTION:

- Complete costs section of LOD-Infrastructure document

- Add suggested areas to Resolution document and respond to all comments

ATTENDEES: Wendy, Dan S., Barry, Oliver, Larry, George, Jon

Discussion of document specifying work needed to implement a DDI resolution system for CVs and RDF vocabularies

https://docs.google.com/document/d/1rPcg44jV2xmqGTxTMKZsFgFgUhxtGeSpUt9DiE7pX7k/edit?usp=sharing

- CV repository will be created in next 2-3 weeks. From CESSDA repository to Bitbucket.

Bitbucket provides pipeline options which Oliver can use to set this up on a regular basis (monthly to start with) - Does the script run in the cloud? This may need to be decided by the implementer. This may be a simple docker compose running somewhere.

- The updated docker images would be created each time

- The imported data would be the state of the actual running images of those instances. They are recreated with fresh applications each month or so. We don't need to worry about recovery of those container instances because we will always be able to recreate them.

CV maintenance:

- CVs are created and maintained in CESSDA. Exportable into SKOS

- The CVs are pulled from CESSDA and translated to provide the content in DDI structures. A Bitbucket repository will contain all of the DDI content (SKOS, CodeList, HTML)

- This repository would be updated monthly (more or less) using a Bitbucket pipeline which takes the CESSDA SKOS and creates the formats needed by DDI
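
One step of the conversion described above, turning exported SKOS into an HTML page, could be sketched as follows. This is only an illustration under stated assumptions: it assumes the export is RDF/XML with each concept written as a skos:Concept element carrying rdf:about and a skos:prefLabel child, which may not match the actual CESSDA export. The function name skos_to_html is hypothetical and only the Python standard library is used.

```python
import html
import xml.etree.ElementTree as ET

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
SKOS_NS = "http://www.w3.org/2004/02/skos/core#"


def skos_to_html(skos_xml: str) -> str:
    """Render the concepts in a SKOS RDF/XML string as an HTML list.

    Assumption: concepts appear as <skos:Concept rdf:about="..."> elements
    with a <skos:prefLabel> child; other RDF/XML shapes are ignored.
    """
    root = ET.fromstring(skos_xml)
    items = []
    for concept in root.iter(f"{{{SKOS_NS}}}Concept"):
        uri = concept.get(f"{{{RDF_NS}}}about", "")
        label = concept.findtext(f"{{{SKOS_NS}}}prefLabel", default="")
        # Escape both values so that labels and URIs cannot break the markup.
        items.append(f'<li><a href="{html.escape(uri)}">{html.escape(label)}</a></li>')
    return "<ul>\n" + "\n".join(items) + "\n</ul>"


if __name__ == "__main__":
    sample = (
        f'<rdf:RDF xmlns:rdf="{RDF_NS}" xmlns:skos="{SKOS_NS}">'
        '<skos:Concept rdf:about="http://example.org/cv/Sum">'
        '<skos:prefLabel xml:lang="en">Sum</skos:prefLabel>'
        "</skos:Concept>"
        "</rdf:RDF>"
    )
    print(skos_to_html(sample))
```

A pipeline job could run a script of this kind on each export and commit the generated files next to the SKOS source in the repository.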

RDF vocabularies:

- XKOS is currently maintained on GitHub and just requires a pull

- URI resolution will need the new stack. But the actual writing of the files is taken on by CESSDA as part of the SKOS.

- Similar to the ELST thesaurus at least to the publicly visible site

- Pubby and Apache Jena Fuseki: content is stored as triples in a graph database and can be served for different vocabularies and in different formats such as JSON

- https://dbpedia.org/page/The_Guardian

General questions/comments:

What if the Docker container gets moved to a different host?

This is just the infrastructure. Within the restart we need to ensure that a fixed address of that infrastructure would be kept. We would need to bring this into the namespace of the DDI Alliance

A triple store with a SPARQL endpoint and Pubby will provide a wide range of formats.
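
To illustrate what serving a range of formats from one address means in practice, the sketch below picks a serialization from an HTTP Accept header. It is a simplified illustration of content negotiation and not how Pubby or Fuseki implement it. The table of supported formats and the name choose_format are assumptions, and wildcards such as */* are deliberately not handled.

```python
# Hypothetical table of media types the resolver could serve.
SUPPORTED = {
    "text/html": "html",
    "text/turtle": "ttl",
    "application/rdf+xml": "rdf",
    "application/ld+json": "jsonld",
}


def choose_format(accept_header: str, default: str = "html") -> str:
    """Pick the supported format with the highest q-value in an Accept header.

    Ties keep the order in which the client listed the media types.
    Entries with q=0 are treated as "not acceptable".
    """
    candidates = []
    for position, item in enumerate(accept_header.split(",")):
        media_type, _, params = item.strip().partition(";")
        quality = 1.0  # q defaults to 1 when the client gives no value
        for param in params.split(";"):
            key, _, value = param.strip().partition("=")
            if key == "q":
                try:
                    quality = float(value)
                except ValueError:
                    quality = 0.0
        candidates.append((-quality, position, media_type.strip().lower()))
    for negative_quality, _, media_type in sorted(candidates):
        if negative_quality < 0 and media_type in SUPPORTED:
            return SUPPORTED[media_type]
    return default


if __name__ == "__main__":
    print(choose_format("text/turtle;q=0.9, text/html;q=0.5"))
```

A browser asking for text/html would get the HTML page, while an RDF client asking for text/turtle at the same address would get Turtle.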

ACTION: Darren needs to go over it and add cost items; if there are no substantial changes we can then finalize and contact Jared

ATTENDEES: Wendy, Oliver, Dan S., Flavio, Darren

Resolution work:

Darren and Oliver will meet tomorrow

Oliver has been digging into the details of Darren's suggestions as well as how GESIS manages data content

Barry and Wendy will do a zoom edit

Goal is to have these prepared by mid September

Upcoming:

- CDI model will be done in a few weeks but documentation still needs to be completed and packaged

- Codebook issues regarding

Work tasks starting in October/November:

This is a list of development areas we have identified where work has been done during the Moving Forward Project that should be reviewed for incorporation into Lifecycle. The approach will be to identify members interested in specific areas and then solicit a broader group to work on the topic outside of the regular TC meeting (similar to the Codebook approach). The items noted as priorities are those that should be addressed prior to the spring in-person meeting as they will have some impact on how Lifecycle 3.3 is serialized into 3.4.

- After CDI review is done look at Lifecycle data description again in terms of logical data sets and how files were made in the 70's and how that could be simplified along with alignment with CDI (PRIORITY)

- Data cataloging at different levels

- Geographic specification

- Questionnaire improvements aligning response domains with the variables they create, output parameters (select many - allowing a variable to reference a specific response) clearer mapping of data through the capture and process system

- NCubes and NCube definitions and we now have different levels of variables. Compatibility with SDMX 3

- Couple different types of physical records, substitution groups, clarification and simplifications. Aggregates with definition. (PRIORITY)

- Process models

Things to think about for 3.4 work:

- Alternate namespaces in substitution group

- Complex nested choice sections

- Use of abstract classes in 3.3 helped a lot in preparing 3.4

- Upper model work needs to continue - how products mix together (PRIORITY)

ATTENDEES: Wendy, Jon, Oliver, Larry, Barry, Dan S., Darren, Flavio

Resolution System:

- Reviewed changes to the DDI Resolution document. Notes were made on document

- Darren and Oliver will meet in the next few weeks to record how the URN and URI content will be resolved and how different versions will be represented (this is particularly important in terms of CV content).

- Serving XKOS is relatively straightforward

- Skosmos usage

- Underlying content (metadata schema)

- It ultimately comes back to the hosting question

- Do we have anyone specific in mind to host this (someone's existing cloud or setting up a new cloud account that someone will manage)?

EDDI update:

EDDI deadline for submissions a couple of weeks away

There are plans for a training event done before or after EDDI

SDTL would be interesting

It would be useful to have something on URN and http resolution - general route, what we're doing and why we're doing it - Dan could submit on the registry base work being done by Colectica

CDI vote:

CDI will probably be delivered to TC in early September. While they are trying to meet the end of August date, early September is probably more realistic. They need it finalized prior to the Dagstuhl workshop. The vote period is only 2 weeks, so delaying in order to provide the webinar for voters should not cause a problematic delay. It should also still allow time to put Codebook 2.6 out for public review prior to EDDI without overlapping the CDI vote period.

Thinking ahead:

How do we want to organize other work tasks noted in the workplan? Continued work on comparison and mapping suite products, DDI Alliance pages, and Codebook future structures. Also for longer term goals, for example, how to identify and set up working groups on development work from Moving Forward that needs to be integrated into Lifecycle and possibly Codebook in the future (Questionnaire, data description, geographic description, separation of logical and physical clarification and simplify, descriptive content for codebook).

ATTENDEES: Wendy, Dan S., Larry, Flavio, Barry

CDI:

What changes have been made since public review?

- Minor changes depending on what is being introduced

- Might be some larger changes - which may need review by TC

- Not new features so not a need for public review

- TC review - would be helpful

- Module separation for example, linking approaches

- Units of measurement is new but essentially a refinement of a controlled vocabulary

- Controlled Vocabulary clarification of reference

- TC should review for comparative conflicts, alignment with other products

- TC needs to be aware of consistency in terms of identification and referencing, controlled vocabularies and other cross cutting ideas and approaches

Are there additional features of UML being used?

- Canonical XMI restrains the amount of EA UML that can be used

- We need the document that describes which elements can be used

Webinar for DDI voting members should focus on:

- How it fits into the DDI Suite - role

- How does it do its role of integration - what is supported in terms of content

- How does CDI fit into the suite of products - addition not a replacement of lifecycle and codebook

- Practical example of what it is intended to do

- Do we have a use case of putting CDI together with codebook or lifecycle - focus on the integration piece

- The webinar could turn into a promotional (what it can do for you)

Approach of Codebook alignment with Lifecycle:

Codebook is descriptive in nature while Lifecycle takes that descriptive content and adds machine-actionable content to better support searching, management, access, and metadata-driven processing. Therefore Codebook's descriptive content should move cleanly into Lifecycle and any actionable content (controlled vocabularies, specific content, etc.) should also be easily transferable.

- Good elevator speech on the relationship between Codebook and Lifecycle

- In Codebook 2.5 we started adding identification for Lifecycle - identification of Lifecycle equivalents - content equivalence is important - making sure that a base type is identifiable if it's identifiable in Lifecycle - This last point is important and will be noted in both the alignment issues and the documentation issues in Codebook to more fully inform users about relationships to Lifecycle on the element/attribute level and in the high level documentation in terms of general use of the specification

FUTURE MEETINGS: Resolution documents will be scheduled for August 19 when Oliver is available

No meeting

ATTENDEES: Wendy, Dan S., Jeremy, Oliver, Darren, Larry, Barry

Guest: Jared

DDI Alliance agency level resolution:

CVs

RDF and other expressions of specifications

Options:

- ICPSR - no, DDI does not have access to server or staff to support this work

- Cloud space with maintenance staffing from member organization - The Alliance currently does not pay for any cloud space

- Hosting and maintenance staffing from member organization

- External hosting/maintenance service

DISCUSSION NOTES:

Needs more specification before we can go out - Skosmos can do RDF resolution and HTML resolution

Runs on Linux virtual machine - Might work for controlled vocabularies but won't support specifications (XKOS vocabulary)

- What is described in the Darren/Oliver document is technology stack that would be needed for that type of resolution using 2 Apache tools

It should be enough as it is stored and developed somewhere else

System would be straightforward and served through 1 or 2 Docker images

Should be enough to throw it onto a cluster and then destroy on a monthly basis (monthly update of system software); pull new docker images for the apache tools

Update system would be automated by monthly pull and replace for system

Maintenance of content would be separate from system maintenance

Kubernetes

- https://kubernetes.io/

https://www.digitalocean.com/pricing#kubernetes - With the pricing they show there $10/month for cost of maintenance

- Set up is the main effort

- Unlike cloud setup used during Moving Forward where we had to do all the updates which caused some vulnerabilities we had to deal with

- We could get rid of all system updates by using Docker images and refreshing system data once a month

- Find someone to initiate and implement that set up

- This still means setting this up

- Is CESSDA a possibility

- Is CV manager dockerized? We think so

- the only interface we would need to the CV right now would be straight forward

- $20 gets you way more than we would need

- droplet is something like a server (virtual service)

- 1 MB CV content, a few more KB for XKOS

- This could scale up by buying larger virtual machine

- Digital Ocean was the one that first dropped out when he searched

- $10-20/month would be an ongoing cost ($120-$240 per year)

- Set up costs depend on the amount of Kubernetes experience the person has

If CESSDA helps with set up we know they have a lot of Kubernetes experience

Probably worth paying for that experience and knowledge - Definitely seem to be talking under $10,000 USD

Define image needed, we want to get this set up on a given platform, want someone to set this up, with refresh once a month

1-2 days for a Kubernetes expert at $1000 to $1500 per day would be about $3,000 so definitely under $10,000 - Could be set up with a Bitbucket repository
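The figures quoted in this discussion ($10-20 per month for hosting, 1-2 expert days at $1000 to $1500 per day) can be checked with a short calculation. This is only a sketch of the arithmetic; the function and variable names are illustrative and no costs beyond those mentioned in the notes are assumed.

```python
# Figures taken from the discussion notes; names are illustrative only.
MONTHLY_HOSTING_USD = (10, 20)      # low and high monthly hosting estimates
EXPERT_DAYS = (1, 2)                # days of Kubernetes expert time
EXPERT_DAY_RATE_USD = (1000, 1500)  # daily rate range


def annual_hosting(monthly):
    """Return the yearly cost for a monthly price."""
    return monthly * 12


def setup_cost_range(days, rates):
    """Return the (lowest, highest) one-time setup cost."""
    return days[0] * rates[0], days[1] * rates[1]


hosting_low, hosting_high = (annual_hosting(m) for m in MONTHLY_HOSTING_USD)
setup_low, setup_high = setup_cost_range(EXPERT_DAYS, EXPERT_DAY_RATE_USD)

print(f"Hosting per year: ${hosting_low}-${hosting_high}")
print(f"One-time setup:   ${setup_low}-${setup_high}")
print(f"First-year total: ${hosting_low + setup_low}-${hosting_high + setup_high}")
```

On these numbers the first year stays well under the $10,000 ceiling mentioned in the discussion.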

Is this refreshment automatically set up in Kubernetes? - John Shepardson and Matthew Morris

- What are we hosting this on - software stack

Apache stack was set for CVs. Apache Fuseki is a triple store and won't do full content negotiation - Tools for serving up RDF

- Need to see if Skosmos can deliver for
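As a rough illustration of what "content negotiation" means for the vocabularies, the sketch below picks a serialization from an HTTP Accept header. It is not a description of any of the tools named above: the file names and the set of media types are assumptions, and the parsing is simplified (partial wildcards such as text/* are ignored, and unknown types fall back to HTML rather than returning an error).

```python
# Hypothetical mapping of media types to stored serializations of one vocabulary.
AVAILABLE = {
    "text/html": "AggregationMethod.html",
    "text/turtle": "AggregationMethod.ttl",
    "application/rdf+xml": "AggregationMethod.rdf",
}
DEFAULT_TYPE = "text/html"


def parse_accept(header):
    """Parse an Accept header into (media_type, q) pairs, highest q first."""
    entries = []
    for part in header.split(","):
        fields = [f.strip() for f in part.split(";")]
        media_type = fields[0].lower()
        if not media_type:
            continue
        q = 1.0
        for param in fields[1:]:
            name, _, value = param.partition("=")
            if name.strip() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        entries.append((media_type, q))
    # sorted() is stable, so equal q values keep the client's order
    return sorted(entries, key=lambda e: e[1], reverse=True)


def negotiate(header):
    """Return the media type to serve for the given Accept header."""
    for media_type, q in parse_accept(header):
        if q <= 0:
            continue
        if media_type in AVAILABLE:
            return media_type
        if media_type == "*/*":
            return DEFAULT_TYPE
    return DEFAULT_TYPE


print(negotiate("text/turtle"))
print(negotiate("text/html,application/xhtml+xml,*/*;q=0.8"))
```

A browser would receive the HTML page while an RDF client asking for Turtle would receive the same vocabulary as RDF, both from one URI.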

DECISION

- Pursue the Cloud with the monthly replacement software approach noted in discussion. Identify DDI members who can provide set-up expertise.

- Write up specification with ultimate deliverables and the time frame

- Maintenance side is something for the Executive Board for on-going costs (cloud space and maintenance cost)

- Provide a nice succinct proposal - Include on-going cost of space and maintenance costs - planned maintenance costs

- If we exceed $2000 amount we would need to go back to the board for approval

- Provide background paper on options for resolution levels currently in the works

- From Jared's perspective the sooner the better to get this through bureaucracy

- We can unofficially seek support from interested people

ACTION:

Oliver is unavailable until 15 August

Darren will provide draft of the proposal with work specifications with deliverables and time-frame

Wendy will work on finishing the DNS Resolution document for background providing clear levels of activities that currently need to be managed by the agency (in this case int.ddi)

ATTENDEES: Wendy, Dan S., Larry, Oliver, Darren, Flavio

AGENDA:

- XKOS and Paradata groups: topics on which TC support/contact will be needed this year

- XKOS publication process for Best Practices - opportunity to look at any official publication process or a uniform look/feel for Best Practice documents. How to reference an XKOS classification. Look at common features and content.

- Paradata: Opportunity to work with them in terms of preparing new content area where it may be added to more than one product where we want consistency between the models in terms of making sure content is easy to share or transfer between products

- Discussion:

- Formal definition expressed as an ontology or model would be good to work on

Darren would be interested in working on this

CDI has talked about attributes down to the data and how this could be supported by an ontology - Model before ontology would be useful

- Clarity between a measure and a variable and the instance of a measurement, use of conceptual value (instance of a measurement, expressed as an instance value). Paradata has information on the instance of a measurement. Tie into variable cascade.

DNS Resolution:

Draft document covering preparation of document on current status of agency resolution, DDI Alliance resolution service for sub agencies and ddi published content, options for resolution by agencies currently supported or in progress

https://docs.google.com/document/d/143tERbtM8Eze-z28jCZMxTm8bHaGfKh6/edit

Comments have been added to the page including additional areas that need to be covered and clarifications. We will work on this over the next month. Target audience is the Scientific Board but content should be usable for informing ddi agencies and end users.

Preparation for meeting with Jared on 22nd

ATTENDEES: Wendy, Dan S., Larry, Oliver, Barry, Flavio

Follow up on last week's action item

- Talked to Barry and Oliver about generating announcements from change log information. This can then go out through Jared. We need to bring him in as that gets further along.

- Oliver is working out whether there is an API available for getting change log information. He will be working on the scripting in the coming weeks.

- We can look at and play with timing and publication options for Michael. Work with Michael for the best process. With the Bitbucket repository it is easy to identify change.

Discussion of next week's Scientific Board agenda

XKOS and Paradata

- Publication targets of Paradata? Lifecycle, Codebook, CDI attributes at various levels etc. Paradata. Do they plan on an RDF representation? Definition of Paradata.

- XKOS time line for the year to 18 months

DDI URN resolution - Status update - goals and policies to be formed

- Is it possible that people want to register but don't have the capability to provide resolution.

- The http resolution of the agency id is the ability for different organizations to create a templated URL for different user services (direct link to DDI, a viewer page) There will be the ability to use the token of the DDI URN to create a URI that can be accessed. This gives agencies a lot of flexibility to do what they need to do.

- No agency is required to have an agency based resolution system. For the web browser resolution you just put in your web site.

- Anyone can register an agency. There is no requirement for resolution. But if they want it to resolve in the future they can update their http uri's to new content resolution.

- The HTTP resolution is scheduled for sometime in September.
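The templated URL idea above can be sketched as follows. This assumes a DDI URN of the form urn:ddi:agency:id:version; the template, its placeholder names, and the example.org address are hypothetical and do not describe the actual registry configuration.

```python
from urllib.parse import quote

# Hypothetical URL template an agency might register; placeholders are assumptions.
TEMPLATE = "https://example.org/ddi/{agency}/{id}/{version}"


def parse_ddi_urn(urn):
    """Split a DDI URN of the assumed form urn:ddi:agency:id:version."""
    parts = urn.split(":")
    if len(parts) != 5 or parts[0].lower() != "urn" or parts[1].lower() != "ddi":
        raise ValueError(f"not a DDI URN: {urn!r}")
    return {"agency": parts[2], "id": parts[3], "version": parts[4]}


def resolve(urn, template=TEMPLATE):
    """Fill the agency's URL template with the tokens of the URN."""
    tokens = {k: quote(v, safe="") for k, v in parse_ddi_urn(urn).items()}
    return template.format(**tokens)


print(resolve("urn:ddi:int.example:Var_1:2"))
```

An agency without its own resolution system would simply register a fixed web site address, while an agency with a repository could register a template like the one above and change it later without touching the URNs.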

UPCOMING MEETING:

Reminder that Jared will attend the 22 July meeting discussing computing and management support for resolution of DDI RDF Vocabularies and DDI Controlled Vocabularies.

ATTENDEES: Wendy, Oliver, Darren, Larry, Dan S., Flavio, Jeremy, Michael, Sanda, Taina, Barry

AGENDA: Clarification of steps needed to finalize the publication of DDI Controlled Vocabularies on the DDI web site at the level of access currently available. (NOTE: this does not include content resolution of items in vocabularies which is being addressed separately)

STEP 1: getting SKOS output correct from CESSDA

STEP 2: transformation to correct SKOS output, create the CodeList output and HTML

STEP 3: delivery of updated content to Michael for posting on DDI site

Step 1 Notes:

There are identified issues with the CESSDA SKOS content which have been noted by Achim and passed to the TC. Franck Cotton reviewed the SKOS example and validation report, identifying specific problems that required fixing, best practices that should be followed, and other related comments. This information has been passed to Darren and Oliver. While these issues involve the CESSDA system, TC will address the issues in the transformation of the CESSDA output into the DDI published content. Darren will provide this feedback to CESSDA. TC will alter its transformation scripts over time as changes are made in the CESSDA system.

Step 2 Notes:

Tool: is a command line tool that pulls content (SKOS multilingual file from CESSDA); transform to SKOS, CodeList, HTML; prepares package of content to be uploaded to the Alliance site

Options for timing:

- Full fledge update of every vocabulary at a regular basis which would reduce the manual work; only DDI; this would track new language editions; Periodicity can be determined by CV committee (monthly basis)

- Publication basis: Run the command line tool and pass on the file

DECISION: Tool will be run on a monthly basis. This has been agreed upon by Sanda and Taina. The CV group should review during the year to determine if the timing is correct. Periodicity can be adjusted in the future based on this review.

SKOS content:

- Issues around publishing - how to notify users of new publications; there is no metadata providing the equivalent of change files

- The way CESSDA is handling versioning and changes in the metadata is wrong and needs a ground up correction (personal view). This is a long term issue.

- We could address the versioning regarding language information at the DDI level, effectively consolidating and creating a change log.

- In order to fix the versioning and lifecycle management how do we provide uniform versioning system.

- How to incorporate change logs into SKOS and other output

- Example, if you have a URI of a Norwegian label you know the source concept but you don't have information on comparability between version numbers.

- What we actually need is new version of relationships between versions and concepts. isNewVersionOf, isPreviousVersionOf, isSameAs at item level

ACTION: Oliver needs to determine if we have enough information to make these additions, do we need to retrieve separately

ACTION: Wendy obtain and verify versioning of CVs in DDI as stated by group and clarify what will be expressed in DDI
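To make the item-level version relationships discussed above concrete, here is a minimal sketch that compares two versions of one vocabulary and derives isNewVersionOf, isPreviousVersionOf and isSameAs statements. The relation names come from the notes; the URI pattern, the vocabulary content and the function name are hypothetical, and whether the CESSDA export carries enough information to do this is exactly the open question in the action item.

```python
def version_relationships(previous, current, base_uri, prev_version, cur_version):
    """Compare two versions of one vocabulary, each a dict of code -> definition.

    Returns (subject_uri, relation, object_uri) triples for codes present in
    both versions, plus the sorted lists of added and removed codes.
    """
    triples = []
    for code in sorted(previous.keys() & current.keys()):
        old_uri = f"{base_uri}/{prev_version}/{code}"
        new_uri = f"{base_uri}/{cur_version}/{code}"
        if previous[code] == current[code]:
            triples.append((new_uri, "isSameAs", old_uri))
        else:
            triples.append((new_uri, "isNewVersionOf", old_uri))
            triples.append((old_uri, "isPreviousVersionOf", new_uri))
    added = sorted(current.keys() - previous.keys())
    removed = sorted(previous.keys() - current.keys())
    return triples, added, removed


# Invented example content, not taken from a published vocabulary.
v1 = {"Sum": "Values are added.", "Count": "Items are counted."}
v2 = {"Sum": "Values are added.", "Count": "The number of items is counted.",
      "Mean": "Values are averaged."}

triples, added, removed = version_relationships(
    v1, v2, "https://example.org/cv/AggregationMethod", "1.0", "1.1")
for triple in triples:
    print(triple)
print("added:", added, "removed:", removed)
```

The same comparison, run per language edition, would also yield the consolidated change log mentioned in the discussion.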

Step 3 Notes:

ACTION: Need to agree on the actual URI pattern we want to use. They are currently wrong and this needs to be corrected in the CESSDA tool

All files are in one folder and all file names contain the version number in the file name. Need to see what the pattern currently is and make a suggestion, for example ddialliance/specification/ followed by the code of the CV, the version, and the file format

Considerations --

- This should support having content resolution for SKOS supported somewhere at a different location.

- URI and URN non-machine actionable and machine actionable one.

QUESTION: could DDI site run automatically. What is currently being implemented in JAVA. TC needs to find a location to run that pull.

DECISION: Run the JAVA within Bitbucket. When we are displaying multilingual. Use a Bitbucket repository for that retaining all copies. Michael could just run a clone of the repository.

ACTION: Michael and Oliver need to coordinate so that what comes out of transformation is what Michael needs for updating the site

ACTION: Wendy and Barry need to discuss options for informing public of new CV publications and updates. CV group should then work with Barry and TC to ensure that updates are appropriately publicized.

ATTENDEES: Wendy, Jon, Darren, Larry, Oliver, Dan S., Barry

excused: Flavio

AGENDA:

Content resolution work for XKOS and CVs - status and scheduling of meeting with Jared in July

FROM Jared - How about I attend a TC meeting in July to discuss next steps (I should be available the weeks of 12 and 19 July)? We'll want to identify any DDI member organizations that could provide the services you are seeking. Additionally, we should consider preparing for a competitive bid with outside vendors.

- Oliver tried to do the easy approach on a spare server and ran into a few issues

- Somewhere there should be a service provider that should be able to provide what Darren is talking about where we would just maintain the content rather than the service stack. If we could get.

- Need to be sure we can port off RDF resolution to a different server for content negotiation

- Issue of different styles of content - hosted under the same domain name

- Had a look at the current state of XKOS, we only have HTML and full file access. Need to support current link including the RDF-Vocabularies

- We know software stack we want to use

- Ability to name paths

- Don't want to go off with just a short term solution but want to think ahead to something stable

- Member willing to support long term (5 year commitment with proviso that we don't want things scattered - need software stack maintenance as well as content maintenance)

- If we go with a general cloud we need to maintain stack, if a member then they should provide stack maintenance

- We can't mix CV and DDI RDF content resolution up with the broader discussion of a resolution system for DDI instance content (common content) because that has a different volume and different content maintenance needs

- For the July meeting:

- Get clear on what is needed, who could provide it, and if a call for bid for support

- Need to clarify if ICPSR is unwilling or unable to support

- We need to do resolution and presentation of DDI CV as well as RDF Vocabularies

- We need maintenance of management stack

- Maintenance of content

- Versioning of CV content by language is something that we need to deal with as this is separate from this discussion and something we deal with in the publication process

- Be aware of problems becoming captive to them or loss of service and content due to closure

- Clarify with dealing with Michael that it is just the publication of html, options for grabbing full content, but NOT resolutions

- Material from CESSDA output to ICPSR for visual page publication

- SKOS to DDI CodeList

- Tool to download and transformation has been placed in tool

- Periodicity of update (routine updates for anything new - push or "publication driven - pull")

- Oliver will send out an example of what the tool currently is able to provide and then talk to Michael to work out the details from that

- July 15 or 22 - Jon won't be around on 15th

ACTION ITEM: Work with Michael to ensure that content is transformed into page content

ACTION ITEMS:

- Get materials together for Jared prior to meeting

- Ask Michael to July 3 or 10th meeting to discuss publication of CV (standard content - not resolution)

Work on additions to the product pages for Codebook (past development, change logs for published versions, etc.)

Looks good continue with this

Issue with URL resolution resolved

network blip - why schemas should be stored locally for DDI instances

informational you want the canonical but functional you want local

Best Practice issues to be addressed

ACTION ITEM: (all completed - wlt)

remove page of topics

- Add label BestPractices to JIRA

- Create public filter on BestPractices label (inform Hilde and Arofan of new label)

- change link to a link to JIRA filter

ATTENDEES: Wendy, Larry, Barry, Dan S., Oliver, Flavio

Some notes regarding specific activities:

CV publication:

Resolution of URI is still a problem with SKOS. Would it be possible to change the URL structure of these to be more in line with CESSDA repositories; adopting the URI part.

Oliver will write this up within the week and send it out. Find out from Achim what the validation problem was that he was seeing.

For the CVs there is a very simple approach. Implementation is about a day if ICPSR is supporting. High priority: get this out in the next few months

HTTP agency resolution service: Should occur Aug/Sept; grid updated

Product mapping

Identified a few mappings: Variable Cascade; Statistical Classification; Process Model; Data Description; presentation is a primary issue and information may need multiple levels of presentation for different purposes from interpretability to actionability...notes added to grid

ACTION

ADD earlier versions to codebook find and add

NOTE: www.icpsr.umich.edu/DDI resolves to ddialliance.org

https://ddialliance.org/Version2-0.xsd

http://www.icpsr.umich.edu/DDI/Version1-2-2.xsd

https://ddialliance.org/Version2-1.xsd

Have emailed Michael for a listing of all URLs for Codebook schema and dtd instances.

FOLLOW-UP ON VALIDATING URLS:

I mis-moused copying the URL into a browser while we were meeting. http://www.icpsr.umich.edu/DDI/Version1-2-2.xsd does actually bring up the proper xsd.

When I later tried the xml file in oxygen it did validate.

I was trying to make two points

1) having a working URL in the schema location is helpful for files distributed by an archive. That makes it completely clear which schema is correct.

In the example below, the namespace URI (http://www.icpsr.umich.edu/DDI) is not specific to DDI Codebook, being the DDI home page. The version 1.2.2 may no longer be adequate now that version number is no longer tied to product line (Codebook, Lifecycle, etc.). A better practice might be to use https://ddialliance.org/Specification/DDI-Codebook/ for Codebook.

ATTENDEES: Wendy, Darren, Dan S., Oliver, Larry, Barry, Flavio

AGENDA:

CDI review package - update

- Expected delivery to TC end of July

- Contents look like they should cover all requirements including request for the profile of UML usage with which to initiate discussion of long term maintenance of CDI

- Initial decision will need to be whether a final review is needed in addition to the short review included in the vote

Canonical URLs for DDI XSD files - Darren

- Discussion with CESSDA regarding rules for DDI profiles on the use of a canonical format for references to DDI schemaLocations. Problems with some systems have arisen with the alternate use of http and https references as well as the presence or absence of www

- Is there a canonical URL to use when referencing a schema location?

- Best practice to have schema files locally; remote schema location can have resolution problems, server down

- Tool should load schema separately from validating instance

- CESSDA wants to guarantee they are referencing the same schema files

- Sounds like it may be better to make sure tools handle variants

- Always have official DDI namespace in the instances which is set in xmlns

xsi:schemaLocation - There are currently various levels of capability in the CESSDA environment

- As for http and https, aren't some browsers refusing to open files served over http? - definitely an issue in some cases

- Is it possible that the DDI site does not do a redirect but delivers as http in those cases? How is this set up at DDI? Check this out. [testing shows both http and https resolve to https]

Example that bounces

<codeBook version="1.2.2" ID="ESS1e06.6" xml-lang="en" xmlns="http://www.icpsr.umich.edu/DDI" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.icpsr.umich.edu/DDI http://www.icpsr.umich.edu/DDI/Version1-2-2.xsd">

Note that later check using http://www.icpsr.umich.edu/DDI/Version1-2-2.xsd resolved to https://www.icpsr.umich.edu/DDI/Version1-2-2.xsd on DDI site

Tried this header using XMLSpy and it validated.
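One way a tool could "handle variants" as suggested above is to read xsi:schemaLocation from the instance and compare schema URLs while ignoring the http/https scheme and a leading www. The sketch below uses the header from this example (self-closed so it parses); the function names are illustrative, and treating such variants as the same schema is an assumption that would need to be agreed as policy.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

XSI_NS = "http://www.w3.org/2001/XMLSchema-instance"

# Header from the example in these notes, closed so that it parses as a document.
SAMPLE = (
    '<codeBook version="1.2.2" ID="ESS1e06.6" xml-lang="en" '
    'xmlns="http://www.icpsr.umich.edu/DDI" '
    'xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" '
    'xsi:schemaLocation="http://www.icpsr.umich.edu/DDI '
    'http://www.icpsr.umich.edu/DDI/Version1-2-2.xsd"/>'
)


def schema_locations(xml_text):
    """Return {namespace: location} from the root element's xsi:schemaLocation."""
    root = ET.fromstring(xml_text)
    tokens = root.get(f"{{{XSI_NS}}}schemaLocation", "").split()
    return dict(zip(tokens[0::2], tokens[1::2]))


def variant_key(url):
    """Reduce a URL to a key that ignores http/https and a leading 'www.'."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return host + parts.path


def same_schema(url_a, url_b):
    """True when two schema URLs differ only by scheme or a 'www.' prefix."""
    return variant_key(url_a) == variant_key(url_b)


locations = schema_locations(SAMPLE)
print(locations)
print(same_schema(locations["http://www.icpsr.umich.edu/DDI"],
                  "https://icpsr.umich.edu/DDI/Version1-2-2.xsd"))
```

This only covers scheme and www differences on one host. Mapping between the icpsr.umich.edu and ddialliance.org addresses, or to a local copy of the schema, would need an explicit lookup table.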

ACTION: Is there a need for an Apache configuration change? Can Michael send the host definition for the DDI Alliance site. Set up meeting with Michael, Oliver, Wendy, Darren, plus whoever wants.

ACTION REQUEST: Good to have a record of decisions and approach to provide guidance to community - Darren will supply when work is done

SDTL - sample description content

- This is about data sets being used with the appropriate weighting system or systems.

- Stas's proposal had 2 parts: 1) creating items in DDI that would describe what the weights should be and 2) including the code to be used in Stata or other form that should be used to accurately weight.

- Providing code for guidance is an excellent idea. It is potentially possible to create DDI that could be read and processed by the program to generated.

- However it was very Stata oriented in terms of standard weighting. Had not really broached the subject of complex sample weights (stages, strata, etc.).

- There is a group proposed to extend SDTL to describe generated data through regression etc. using complex weights. Working with experts in this area. Looked at Stata commands for complex weights and the package for R use of complex weights. Made a list of needed content. Came up with a model that could be used by DDI and SDTL that would cover what was needed. Document provides examples of how these would be expressed.

- Stata can actually write these JSON commands.

- If we are interested in putting a full scale description of complex survey weights this would be a model. Still needs some validation. Only way to be sure is to run them with some data to validate.

- Is it package centric? Is there a need to create new content for new or other packages? Statisticians agree on what these things are so a package can be mapped to this content, for example SPSS writes its own XML document which would be compatible.

- What is the role of Codebook in terms of guidance.

(Should be reviewed for Lifecycle - with this new content) - Flavio is following up on this - One approach is to identify all the different parameters that were used and describe them

The other is to provide the code to be used for analysis of data for different packages.

Surveys provide a description - to what extent do you need actionability or interpretability to clarify what is needed

Codebook: what is meant by "compatible content" within the definition of what Codebook is intended to relate

- KEY CONCEPT: Descriptive -- Interpretable -- Actionable

- Level of richness requires use of controlled vocabularies

- Target for Codebook should be Interpretability

- There is vocabulary to the parameter names as well as definitions inside the weights

- Even in cross discipline we use the same terms differently. It would be great to have this level of interpretability through controlled vocabulary.

- Another use case that could be possible is to provide tools to help people with these functions if there is interpretable content with that level of preciseness of vocabulary. Having the definitions addresses the problem of obsolete code.

ATTENDEES: Wendy, Dan S., Jeremy, Flavio, Larry, Oliver, Darren, Barry

1) Budget requests finalized - cost estimate

- Only 2 specific items for budget request are TC meeting and Agency Registration update

- Addition of content negotiation service for RDF vocabularies and CV contents will be noted as generating a requirement for funding but is dependent on where it is hosted and is not something the TC can specify at this time

- Review of document provided on proposed improvements to Agency Registration (this will result in a budget request)

- Agency registration: #3 there is an RFC for service discovery. Just an alternative way to find the JSON description of agency service locations (file in a special location)

- If someone has a link to your website and wants to know what agencies have registered (map domain name to agency and publish it on your own web site)

- Agency registration is needed for creating an ID space in DDI

- Should promote when updated

2) Update on XKOS activity

Best Practices and push on usage

- in IUSSP - CODATA working group on the "Ten Simple Rules" for vocabularies

- Digitization of UN standards: launching a Proof of Concept on using XKOS to represent the classifications published by the UNSD (ISIC and CPC mainly)

3) Slide deck for members presentation

reviewed, corrected, ready to send to Jared

4) codebook content review (DDICODE 62 41 72 76 74 51)

Question arising on use in catStat as well as catgry - review discussion, logic, usecase, and decision

change locations: codebook-3 entered 2021-05-17

41

<xs:attribute name="access" type="xs:IDREFS" use="optional"/>

<xhtml:p>The attribute "access" records the ID values of all elements in the Data Access and Metadata Access section that describe access conditions for this variable. </xhtml:p>

8375 added "and Metadata Access" to documentation of "var"

1371, 1397 catgry

8173, 8196 valRng

4582, 4594 invalRng

1256, 1286 catstat

7644, 7658 sumStat

6945, 6955 stdCatgry

3004, 3016 dataItem

check issue for comment that the code for category should cover the catStat. ####

ATTENDEES: Wendy, Flavio, Jeremy, Dan S., Larry, Darren, Jon

AGENDA

Funding requests due into Jared May 24

--revised meeting funding request

--How the URI are received and ICPSR support for resolution

--Agency resolution work

--Should be part of the common infrastructure - can we put the costs that we are aware of i.e. what may need funding and a possible indicative cost (8 lines of config file have been provided)

Dan will write up description and send it out to everyone so we can look at it

Presentation to Members meeting - base on SB presentation for next year and add past year work including:

--3.3 published 4/15/2020 so actually end of previous year - remove

--2.6 work

--CDI publication work is proceeding

--Pages - products and subset of Learn (revised Product section - standardized information set for each product, Current product overview and developing products overview); Learn/Resources: Markup Examples reviewed and placed in separate database linked from each product version page as well as general searchable list; Tools - began review of contents, underlying database, and filtering issues; Glossary - discussions regarding content and upkeep; Relationship to other standards - reviewed content and where possible links should go; DDI Profiles - reviewed considering approach for this work; Resources page: content and links to sub pages as well as other pages in different locations

--SDTL published 2020-12-01

--DDI coverage and conceptual model initial work

----defining each product within the suite in terms of coverage area and application support

----conceptual model of DDI coverage area (draft - model and initial work on mapping product pieces to model)

--Initial review of the COGS work done and are revising the ingest text

Notes on clarifications for work plan and any document to groups outside of TC

--enumerating more specifically

--group under thematic area (clear disaggregation)

--anything that provides more clarity of why something is being done and what it tries to achieve will be helpful

ATTENDEES: Wendy, Jon, Oliver, Dan S., Barry

AGENDA:

Funding request: Meeting? Topic?

--Process: Once Jared has requests the SB will be reviewing those related to scientific workplan/agenda; providing some comments on priorities in terms of the strategic plan

--TC face-to-face meeting - first half of 2022

could align with NADDI as we normally hold in Minneapolis

Oliver, Darren, Jon, Johan - overseas

Flavio, Larry - local

We need to meet - note items in the roadmap (2.6 and 3.4) 2.6 should be out by then

Describe an area of work - focus to be determined

We need to have a limited set of objectives so that we can get a focused set of work done rather than being too broad

--Cost of hosting a resolution service for RDF vocabularies and CV content

--Cost of hosting COGS system (probably a next year piece)

--HTTP base resolution - improvement to the software (a week or 2 of work) - Dan will write up for next week

Response from DDI-CDI

--sounds like a reasonable approach

--we will expect to have information on current processes and the profile of UML objects/features used so that we can begin discussing this with MRT once they have the package ready for the voting and publication process. Note that with Flavio, Larry, and Oliver on both committees we can obtain some of this information informally through them.

Resource Pages

--Discussed general approach

--Noted that an important process will be sorting out current content to the appropriate location (tools and examples are currently a mixed bag of content). This includes organization of underlying databases (number, coverage, content) to best support content and access

ACTION: (Wendy) As more work is done on resources pages I will create a page on the wiki to present and discuss approaches

UML modeling in CDI

--Oliver wrote the CDI to XML tooling - repository link - Achim took over work so not sure if what is published is up to date

--Separate UML models independent and dependent (ex. XML or RDF specific) structures - Flavio would be the person

--Modeling only done in independent and transformed to dependent

NEXT WEEK:

Funding requests - have estimate and draft statements ready for discussion

ATTENDEES: Wendy, Jon, Oliver, Darren, George, Dan, Flavio

Preliminary discussion of DDICODE-52 please read the proposed solution

Note that this is focused on the use of weights in analysis

--stata has the sloppiest syntax of the set

--SAS has a lot more content on weights and more moving parts

--SPSS look at the CSPLAN content which is more detailed but is convoluted

--Does it need to be referenced/described by variable statistics? can statistics point to these? do these complex approaches apply to summary statistics? If the variable is tagged with the weight that it should use it is assumed when there is a single set of weights.

--Does there need to be something to clearly differentiate weighting approach

--There is work with MIDAS to determine how to present which weight was used

George getting in touch with Stas regarding SDTL work

Use of Slack in DDI

- would it be more appropriate to have in JIRA - we can integrate Slack into JIRA

- If someone posts a question on Slack someone can cross post

- Jon is on and will keep an eye on Question section to respond or file a JIRA issue when needed

- Slack can be used in a browser

- You can attach Slack channel to a JIRA tracker

Tools page update - ideas for content and maintenance

- EDDI, NADDI, and IASSIST requests to file their tools

- there are things that are long dead - add an updated field that indicates that the person was contacted etc. Jon is willing to do this once a year. So update...3.0 cannot be implemented

- No longer available status ...what is the point of having it there

Implementation of maintenance - Note to DDI-CDI group

- Is this a process we need to talk about from scratch?

- No there isn't anything specific

- Bring in the broader discussion

- Bring up the material from the Dagstuhl meeting in October 2017

- There was a UML model originally for Lifecycle but there was the problem of keeping in sync

- UML centric CDI model actually ended up with a platform specific model and platform independent model

- Right now everything doesn't need to be done the same way but we need to know what the process is and how to transfer it between groups

- Cloud solutions for tools in the future for version control etc.

ATTENDEES: Wendy, Jon, Dan S., Darren, Oliver, Barry, Flavio

https://docs.google.com/document/d/1DuYMk2n8GSfbxjlEjweDuw42Y29Ork2npt6Oq-jQOyQ/edit?usp=sharing

varRange - assumes that the variables in a continuous range are in the order in which they appear in the data file; this is not always the case. Documentation must clarify that this is useful ONLY in conjunction with physical layout information for a specified instance.
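A minimal sketch of the problem, assuming a range is given as a start and end variable and resolved against the physical order of a particular file. The function name, variable names, and layouts are hypothetical and are not part of the Codebook schema.

```python
def expand_var_range(start, end, physical_order):
    """Return the variables from start to end (inclusive) in physical order."""
    first = physical_order.index(start)
    last = physical_order.index(end)
    if first > last:
        raise ValueError("start variable appears after end variable in this layout")
    return physical_order[first:last + 1]


# Hypothetical layouts of the same variables in two different file instances.
layout_one = ["V1", "V2", "V3", "V4"]
layout_two = ["V1", "V3", "V4", "V2"]

range_one = expand_var_range("V1", "V3", layout_one)
range_two = expand_var_range("V1", "V3", layout_two)
```

The same range "V1 to V3" includes V2 in the first layout and excludes it in the second, which is why the range is only meaningful together with the physical layout of a specified instance.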

57 has nothing to do with SDTL but with the SDI work. Notify George.

CDI vs MRT

What is the remit? How does the remit of MRT overlap with that of TC as described in the ByLaws?

CDI needs to look at the longer term maintenance in terms of tooling and how changes are recorded and changed

Right now there is one person doing changes, or changes are funnelled through one person. This was what we were trying to get away from. What do they envision their process will be like in the future?

Rapid prototyping was the goal and why we are switching to COGS.

XKOS is a bit different as it is pretty open through the GitHub tools

It is under version control. Could put it into different formats.

Jon will draft up some notes on this so we can get it to CDI as soon as possible for them to respond to, so we go into publication with an idea of where this goes.

ATTENDEES: Wendy, Darren, Larry, Jon, Michael, Oliver, Flavio, Dan G, Barry

Discussion of providing a means of resolving DDI RDF vocabularies (currently CVs and XKOS) down to the vocabulary instance level. Darren and Oliver will both be available. Goals of this meeting are to clarify exactly what is needed and a process for achieving this in the near future.

Controlled Vocabulary Issues:

Currently, notional URIs exported as RDF (SKOS) content from CESSDA Vocabulary Manager do not resolve anywhere e.g. https://ddialliance.org/Specification/DDI-CV/AggregationMethod_1.1.html#Sum . Secondly, these are HTML URLs rather than URIs functioning as resolvable persistent identifiers. Thirdly, there is currently no RDF endpoint infrastructure at ddialliance.org to support URI resolution.
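One detail behind these points: the part of a URI after "#" is a fragment, which HTTP clients do not send to the server, so a server can only ever resolve the document part of such a URI. A minimal sketch using the Python standard library and the example URI above:

```python
from urllib.parse import urldefrag

uri = "https://ddialliance.org/Specification/DDI-CV/AggregationMethod_1.1.html#Sum"

# urldefrag splits a URI into the part a server receives and the fragment,
# which is handled on the client side only.
document_url, fragment = urldefrag(uri)
```

Here the server would see only the request for the HTML document; identifying the "Sum" term is left to whatever the client does with the returned page.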

There are multiple problems, including the delay in getting new versions published (the example used was a version 1.1 where the most current published version is 1.0).

The DDI system currently does not provide a means of resolving to a vocabulary instance endpoint. We also have minimal clarity on what should be provided as an endpoint to an RDF vocabulary: an HTML product (https://rdf-vocabulary.ddialliance.org/xkos.html) or an RDF expression such as Terse RDF Triple Language (Turtle).

Getting to the end point, the object is still a problem (RDF end point going to an item)
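One common way to offer both an HTML page and an RDF serialization from the same URI is HTTP content negotiation on the Accept header. The sketch below is only an illustration of that idea, not something decided in the meeting; the function name is hypothetical, and it deliberately ignores wildcards such as "*/*".

```python
def choose_representation(accept_header, available=("text/html", "text/turtle")):
    """Pick the available media type with the highest q-value in an Accept header."""
    best_type, best_q = None, 0.0
    for part in accept_header.split(","):
        fields = [f.strip() for f in part.split(";")]
        media_type = fields[0]
        q = 1.0  # default quality when no q parameter is given
        for field in fields[1:]:
            if field.startswith("q="):
                try:
                    q = float(field[2:])
                except ValueError:
                    q = 0.0
        if media_type in available and q > best_q:
            best_type, best_q = media_type, q
    # Fall back to the first available type when nothing matches (a simplification).
    return best_type or available[0]


browser_choice = choose_representation("text/html;q=0.9, text/turtle;q=0.2")
developer_choice = choose_representation("text/turtle, text/html;q=0.5")
```

With this approach a browser would receive the HTML product and an RDF client asking for Turtle would receive the Turtle expression, both from one identifier.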

RDF Endpoint:

Goal is to get a snippet of RDF data, whether by direct query or as embedded RDF in HTML pages.

HTML is not particularly useful for developers

Needs infrastructure, such as Apache Fuseki, to present an RDF endpoint to resolve URIs
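A direct query for a snippet of RDF could look like the sketch below, which builds a SPARQL Protocol GET request asking an endpoint to DESCRIBE a single resource. The endpoint and concept URIs are hypothetical placeholders (example.org); the only protocol detail relied on is that SPARQL queries can be sent via GET in a "query" parameter.

```python
from urllib.parse import urlencode


def describe_url(endpoint, resource_uri):
    """Build a SPARQL Protocol GET URL asking the endpoint to DESCRIBE one resource."""
    query = f"DESCRIBE <{resource_uri}>"
    return f"{endpoint}?{urlencode({'query': query})}"


# Hypothetical endpoint and concept URI; no such service is assumed to exist.
ENDPOINT = "https://example.org/ddi-cv/sparql"
CONCEPT = "https://example.org/cv/AggregationMethod/1.1/Sum"

url = describe_url(ENDPOINT, CONCEPT)
```

A client would then fetch this URL with an Accept header naming the RDF serialization it wants.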

2 things to be solved:

1-CESSDA tool needs a proper resolving mechanism for what is within the tool

Oliver to address that in a team meeting at GESIS regarding understanding of RDF stores

Someone else needs to help Sigit to identify and implement right entry

2-What do we need to do to publish the DDI vocabularies

DDI vocabularies need to get published on Alliance end (they could be managed and edited elsewhere prior to publication)

DDI needs to support resolution of Alliance URIs

We need to port everything out from CESSDA Vocabulary Service as a SKOS or SKOS-XL file.

DDI uses the CESSDA CV manager as a means to edit and manage versions of DDI CVs. DDI is responsible for the publication and resolution of the DDI CVs.

CESSDA CV manager needs to be fixed

The standard export of SKOS has some internal issues

Original CESSDA vocabulary has a URN that resolves within the CESSDA system (but only to an HTML page). The data model currently in use at CESSDA will be challenging to transform straightforwardly into SKOS(XL) structures. Additionally, the object model is predicated on the idea of one CV object per language per version, which does not completely align with DDI treatment of language as an object property.

The client capability of the CV service is that the client carries the traffic for the URI

The URI being generated into the RDF could resolve to an endpoint outside of the CV service

If we set up an RDF container that just consumes the RDF exported from the CV manager and give that to the client, we could route back to the CESSDA system for resolution. Set up a proxy rule to tunnel to the CV service. However, this does not solve the issue for other DDI managed RDF products such as XKOS or RDF expressions of other products. This will be a growing issue.

Would ICPSR be willing to set up an RDF container?

Currently DDI uses static HTML and XML files on a server. The current server is set up only to support Drupal pages

It is a Linux Apache web server

The web site publishing could work in the same way to static files - SKOS to update into HTML and other formats

The whole point of DDI using the CESSDA tool was for editing and version management support which in the past was a fully manual process.

We need to create a native environment that supports resolution of RDF endpoints; separate RDF store able to resolve DDI Alliance URL/URI

--We need to have an RDF store. The question being WHERE? We had a cloud server at one point for Lion

We need to talk separately about editing and publishing. Editing will continue on CESSDA tool for now as it meets our needs. Changes in the system, particularly output changes, would need to continue to meet the needs of the DDI CV group

Suggestion for approaching this:

- Our discussion is mixing editing with publishing. Currently editing is tied to CESSDA CV tool functioning. TC needs to focus on publishing and what that needs to include (in addition to static pages) - can someone write down what infrastructure could act as RDF store and publisher?

This would help ICPSR to determine how and if they could host it - see who is prepared to host such a thing

RESOLUTION:

Clarify performance requirements, workloads for a publication and resolution system

CESSDA remains the editing and version management platform

ACTIONS:

- Darren will draft up requirements for software stack for publishing endpoint - before next Friday SB meeting. Oliver will assist. The document should explicitly mention Apache modules that can be used to support this work

- There is a concern that the data model in the CESSDA tool is not able to publish in the format we need. TC will need to monitor this and be prepared to provide alternatives.

- Michael works primarily with static web content. He would need to bring this to Jared in order to make the case to get time and developers to work on this if ICPSR is willing to provide this service.

ATTENDEES: Wendy, Dan S., Oliver, Flavio

Reviewed the entries in Codebook bitbucket related to CV and text consistency work. Dan will do a more detailed review and validate. The next areas of Codebook work are some specific issues for extended content.

Went through first draft of document commenting on workplans submitted to SB. Added some points. Wendy will update and post for additional review and comments. Sending to SB next week.

ACTION:

Wendy will contact Darren for availability next week to have a discussion of steps needed to get a resolution system for RDF content supported at DDI Alliance. If he is not available we will set up a separate meeting.

ATTENDEES: Wendy, Dan S., Darren

Discussed the Codebook changes for CVs and added notes on conclusions

Controlled Vocabulary issues - DDI - Confluence (atlassian.net)

Looked at CESSDA SKOS extracts at

https://vocabularies.cessda.eu/vocabulary/ModeOfCollection

Information will be transferred to specific DDICODE issues as relevant

ATTENDEES: Wendy, Jon, Dan S., Flavio, Larry, Oliver

Update from SB meeting

URN resolutions - This should be high on our list in terms of the technical work to achieve URN resolution for CVs and XKOS. SB is looking at some of the broader policy issues regarding the level of support the Alliance should provide. What this would involve and cost.

2021/22 activities - provide some more detail in terms of things that need to get done; set some priorities

Reviewed the TC presentation identifying priority issues for TC. Wendy will relay in review of all group plans and development of overall plan from SB for approval at Scientific Community meeting.

Additional information on web page work including content model for DDI overall

- Showed Training Group organization chart for the pages under LEARN. Helps to inform where the pages TC is responsible for will fall. Checking with Jane on some specific questions about the former Getting Started page and responsibility for the parent page Resources

- Discussion of Mapping and definitions of different levels of mapping (what is mapped, how its mapped, what the mapping is used to support) in the context of the Getting Started page content and what the TC is doing with additional levels of the Overview of Current Products page.

- Wendy will incorporate notes from discussion and post for additional comments. See Mapping DDI

NEXT MEETING

North America goes to DST on Sunday affecting meeting time next week

No items this week to follow over to next week

Jon will check for agenda items - early next week.

If no agenda items the meeting will be cancelled

ATTENDEES: Wendy, Jon, Dan S., Jeremy, Oliver

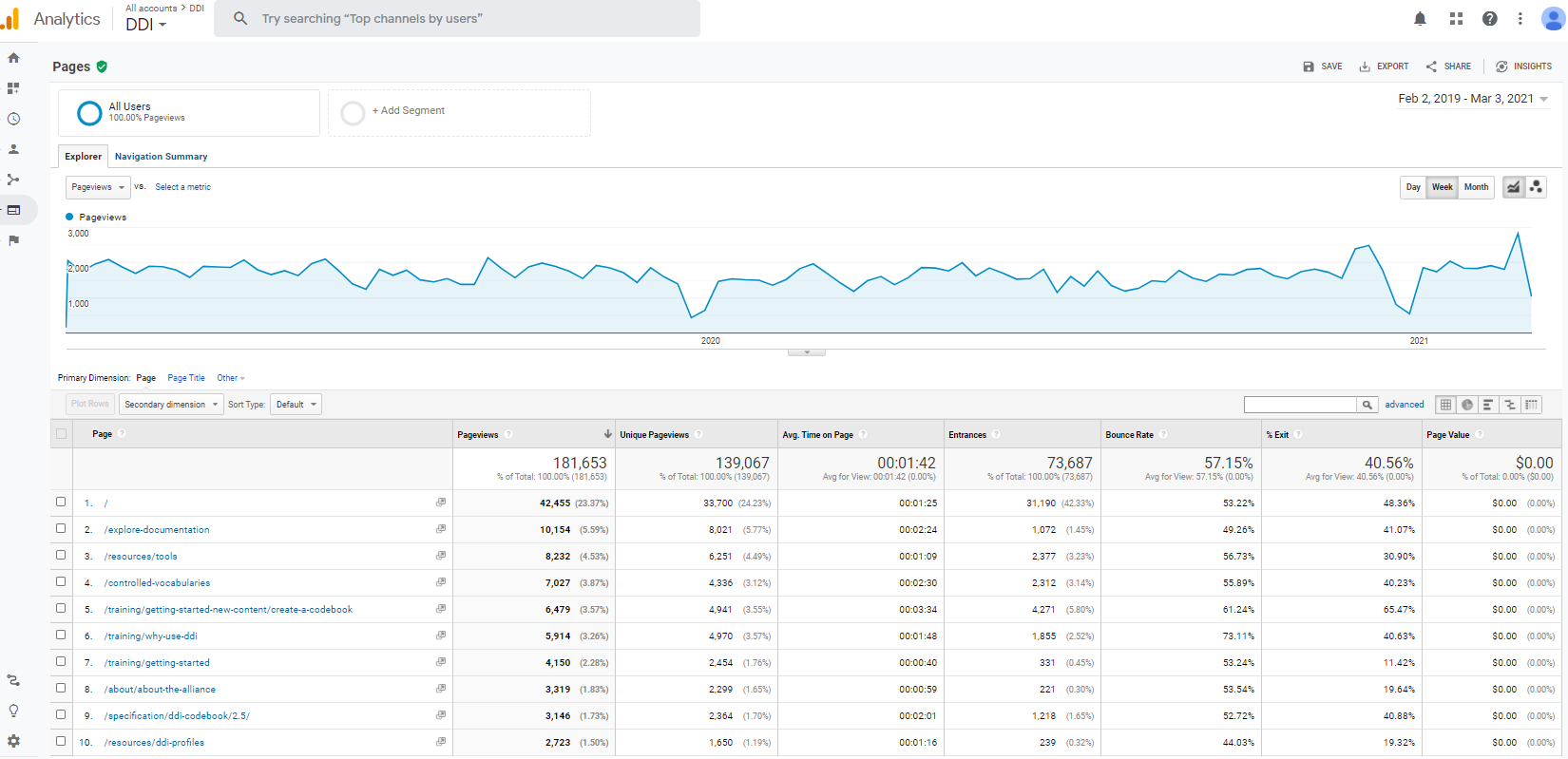

Getting Started Page discussion:

- What is the current purpose of the page

- Is anyone looking at it (send Barry request for )

- Task oriented

How might this page be used?

- New colleagues - starting to work with DDI data, what would you like to have here

- Developers - For getting started developing with a particular product - would this be better served in another area say through products with developer information which is more product/version specific

- Business deciders - Does DDI cover what I need to do?

- Boss said to use DDI and so they need to find out about it

- Or new in this job and want to provide a place to go to get general impression of DDI

- Assuming we are targeting people who are doing a specific task and are coming to the web page with zero knowledge

Conclusions: This page is intended for a person with zero knowledge (new potential users), so what do they need and how might this be presented?

- High level UMLish picture of what are the main things DDI works with (studies, questionnaires, data sets, question banks, variable banks, content management)

- Describe with some prose - what is inside the box that is labeled DDI what can you expect from that

- List of use cases -

Information needed to describe task work:

- What products can be used - how each supports

- What fields - why, how to use them, how others use this information

- tools and resources should be linked with filters not repeated

This cannot just be refreshed and needs to be reimagined in the context of the current site. Need to look at what are we trying to do here - get a clearer idea of what this is trying to accomplish - how does it tie to the overview with the application perspective

ACTION: write up an approach to reorganizing

ATTENDEES: Wendy, Dan S., Larry, Oliver, Flavio

Codebook content review:

line 4604

It's set as mixed; why is that? It should just be a string with attributes. Remove mixed="true".

Avoid mixed content where possible
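To illustrate why mixed content is harder to work with, the sketch below parses two hypothetical elements with Python's standard library. The element and attribute names are invented for the example and are not the Codebook element at line 4604.

```python
import xml.etree.ElementTree as ET

# Mixed content: text is interleaved with a child element.
mixed = ET.fromstring("<note>See <emph>section 2</emph> for details</note>")

# Simple content: a plain string plus attributes.
simple = ET.fromstring('<note type="usage">See section 2 for details</note>')

# With mixed content the text is split across .text, the child, and the child's .tail.
mixed_parts = [mixed.text, mixed[0].text, mixed[0].tail]

# With simple content the whole string is available directly.
simple_text = simple.text
simple_type = simple.get("type")
```

A consumer of the mixed version has to reassemble the string from three pieces, while the simple version can be read in one step.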

language code attribute provide source code - will this change